TechRisk #143: Poison LLMs with small samples

Plus: Visa rolls out a protocol to verify AI shopping assistants, the growing risk of using MCP servers, making LLMs remember, storing malware in the blockchain, and more!

Tech Risk Reading Picks

Poisoning LLMs with a small number of samples: A joint study by Anthropic, the UK AI Security Institute, and the Alan Turing Institute found that large language models (LLMs) can be “backdoored” with as few as 250 malicious documents, regardless of model size or total training data. The authors demonstrate that even very powerful models trained on massive datasets can be compromised by injecting a small, fixed number of poisoned documents, which cause the model to behave incorrectly only in the presence of a secret “trigger”. Across models from 600M to 13B parameters, the attacks succeeded equally well, showing that success depends on the absolute count of poisoned documents rather than their fraction of the training set, and that the same poisoning budget works across very different scales. The study suggests that data-poisoning threats may be more feasible than previously believed, and it underscores the need for scalable defenses and further research into mitigating such vulnerabilities in LLM training. [more][more-paper]
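To make the "absolute count, not fraction" point concrete, here is a rough back-of-the-envelope sketch. Only the 250-document figure and the 600M and 13B model sizes come from the summary above. The tokens-per-parameter ratio and the average document length are illustrative assumptions, not figures from the study.

```python
POISONED_DOCS = 250          # figure quoted in the study summary
TOKENS_PER_PARAM = 20        # assumption: a common rule-of-thumb training ratio
AVG_TOKENS_PER_DOC = 1_000   # hypothetical average document length


def poisoned_fraction(params: float) -> float:
    """Share of the training corpus taken up by the poisoned documents."""
    total_tokens = params * TOKENS_PER_PARAM
    total_docs = total_tokens / AVG_TOKENS_PER_DOC
    return POISONED_DOCS / total_docs


for params in (600_000_000, 13_000_000_000):
    share = poisoned_fraction(params)
    print(f"{params / 1e9:.1f}B parameters: {share:.6%} of documents poisoned")
```

Under these assumptions the same 250 documents are a far smaller share of the larger model's corpus, which is why a fixed poisoning budget that still works at scale is notable.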

Visa rolls out protocol to verify AI shopping assistants: Visa has launched its Trusted Agent Protocol, a new cryptographic security framework designed to help merchants distinguish legitimate AI shopping assistants from malicious bots amid a 4,700% surge in AI-driven retail traffic. The protocol lets merchants verify, via a digital “trust handshake,” AI agents that have been vetted through Visa’s Intelligent Commerce program, enabling secure and transparent agentic commerce without overhauling existing systems. Developed with Cloudflare and aligned with emerging web standards, it positions Visa as a potential gatekeeper for AI-driven transactions, even as competitors like Google, OpenAI, and Stripe pursue similar standards. [more]
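The general idea of a merchant checking a signed request against a registry of vetted agents can be sketched as follows. This is not Visa's actual wire format or API. It is a simplified stand-in that uses a shared-secret HMAC from the Python standard library, whereas a real deployment would more likely use public-key signatures. The registry, agent ID, key, and message layout are all hypothetical.

```python
import hashlib
import hmac

# Hypothetical registry of vetted agents and their keys (illustrative only).
APPROVED_AGENTS = {"agent-123": b"demo-shared-secret"}


def sign_request(agent_id: str, key: bytes, method: str, path: str, timestamp: int) -> str:
    """Agent side: sign the request details with the agent's key."""
    message = f"{agent_id}\n{method}\n{path}\n{timestamp}".encode()
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify_request(agent_id: str, method: str, path: str, timestamp: int,
                   signature: str, now: int, max_age: int = 300) -> bool:
    """Merchant side: accept only fresh requests signed by a known agent."""
    key = APPROVED_AGENTS.get(agent_id)
    if key is None:
        return False  # agent is not in the registry
    if abs(now - timestamp) > max_age:
        return False  # stale or future-dated request; limits replay
    expected = sign_request(agent_id, key, method, path, timestamp)
    return hmac.compare_digest(expected, signature)  # constant-time comparison
```

Binding the signature to the method, path, and timestamp means a captured signature cannot be reused for a different request or long after it was issued.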

Broken guardrails: A new report by HiddenLayer exposes a flaw in OpenAI’s recently released Guardrails safety framework, part of its AgentKit toolset, which is meant to prevent harmful or unintended behavior in Large Language Models (LLMs). Guardrails uses LLM-based “judges” to detect and block issues like jailbreaks and prompt injections, but HiddenLayer found these safeguards could be easily bypassed because the same type of model acts as both the responder and the safety checker. Researchers demonstrated that they could disable safety detectors, manipulate confidence scores, and execute indirect prompt injections that could expose sensitive data. [more]

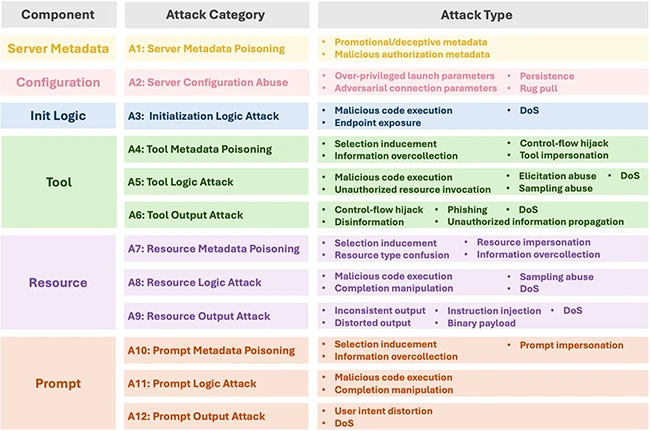

Growing risk of using MCP servers: Researchers have uncovered a major security vulnerability in how Large Language Model (LLM) applications use Model Context Protocol (MCP) servers to connect with external systems. These servers, designed to extend AI capabilities by enabling access to files, tools, and online resources, can be easily weaponized to hijack hosts, manipulate model behavior, and deceive users while evading detection. With over 16,000 MCP servers online and no standard vetting process, attackers can hide malicious code in seemingly legitimate tools. The study demonstrated twelve categories of MCP attacks, many with a 100% success rate, and found that existing scanners fail to detect most threats. [more]

Making LLMs store information: Palo Alto Unit 42 developed a proof-of-concept attack in which an adversary uses indirect prompt injection to surreptitiously corrupt the long-term memory of an AI agent (using Amazon Bedrock as an example). By tricking the agent into reading a malicious webpage, the attacker embeds instructions that get stored in memory during the session summarization step and are later incorporated into the agent’s orchestration prompt. In future sessions, these injected instructions steer the agent’s behavior (e.g., exfiltrating user data), all without the user noticing. The authors emphasize that this is not a flaw unique to one platform but a general vulnerability in systems using memory-augmented LLM agents. Mitigation strategies include content filtering, input sanitization, URL restrictions, monitoring, and layered defenses. [more]
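As one illustration of the URL-restriction mitigation, an agent's fetch tool could check every URL against an allowlist before retrieving it. The sketch below is a minimal example of that idea, not the approach described by Unit 42 or any Bedrock API. The host names and function name are hypothetical.

```python
from urllib.parse import urlparse

# Hypothetical allowlist of hosts the agent may browse.
ALLOWED_HOSTS = {"docs.example.com", "intranet.example.com"}


def is_url_allowed(url: str) -> bool:
    """Return True only for HTTPS URLs whose host is exactly on the allowlist."""
    parsed = urlparse(url)
    if parsed.scheme != "https":
        return False
    host = (parsed.hostname or "").lower()
    # Exact match: look-alikes such as "docs.example.com.evil.net" are rejected.
    return host in ALLOWED_HOSTS
```

An allowlist alone does not stop injection from a compromised trusted page, so it works best alongside the filtering and monitoring measures listed above.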

Overreliance on AI tools spells trouble: AI tools are transforming software development by automating repetitive tasks, generating high-quality code from natural language, and enhancing productivity, but they also risk diminishing developers’ hands-on expertise. As companies increasingly rely on AI coding assistants, smaller engineering teams can achieve more with less funding, yet overreliance may erode the critical problem-solving and creative skills essential to senior developers. However, when used intentionally as interactive mentors, AI tools can blend automation with education, reinforcing learning and helping developers grow alongside the technology rather than being replaced by it. [more]

APT groups’ sophisticated use of AI: Russian hackers escalated their use of AI in cyberattacks against Ukraine in H1 2025, employing it to craft phishing messages and even generate malware, according to Ukraine’s SSSCIP. Over 3,000 cyber incidents were recorded, a rise from late 2024, with AI-powered tools like WRECKSTEEL and Kalambur used in sophisticated phishing and data theft campaigns. Russia-linked groups such as APT28 and Sandworm exploited webmail flaws and abused legitimate platforms like Google Drive and Telegram to host malicious content or exfiltrate data. [more]

AI-assisted reconnaissance: AI is transforming the reconnaissance phase of cyberattacks by rapidly analyzing public-facing information such as login flows and APIs, and by mapping environments with speed and precision. While humans still guide offensive operations, AI dramatically enhances the efficiency, accuracy, and contextual understanding of information gathering, helping attackers prioritize targets and reduce false positives. [more]

F5 breach: F5 has disclosed a breach tied to a nation-state threat actor. [more]

Web3 Cryptospace

$21M lost after private key compromise: A Hyperliquid user lost $21 million in crypto after their private key was compromised, with 17.75 million DAI and 3.11 million MSYRUPUSDP stolen. The crypto was quickly moved across Ethereum and Arbitrum wallets, including the Monero dark pool. [more]

$6.8M in stuck Bitcoin recovered: WinterMist, a blockchain investigation firm, helped a Singapore-based trader recover $6.8 million worth of Bitcoin that had been stuck following failed Bitcoin-to-Tether swaps on Changelly. Although the Bitcoin was confirmed on-chain, the USDT never reached the trader’s wallet, and Changelly offered only automated updates. WinterMist traced the funds using blockchain analytics and discovered they were trapped in an internal wallet due to incomplete compliance checks. Within 26 days, the firm secured a full refund, underscoring both the risks of crypto swaps and the growing importance of specialized asset-tracing services in resolving complex exchange issues. [more]

“Trusted contractor” retained unauthorized access to Wolf’s Ethereum bridge: DeFi project Wolf announced it has locked 57% of its total token supply, worth around $13 million, for two years following a $600,000 exploit. The exploit involved a contractor minting unbacked ETH-WOLF tokens without authorisation. Separately, an early investor refused a lock-up plan. Wolf says both issues have been mitigated and the bridge fortified, and that the token lock is intended to reduce selling pressure and signal long-term commitment. [more]

Storing malware in blockchain: Reports highlighted a sharp escalation in North Korean cyber operations, as state-sponsored groups like Lazarus increasingly exploit blockchain technology to conceal crypto-stealing malware through a method called “EtherHiding,” embedding malicious code within smart contracts and open-source libraries. First observed in 2023, this tactic has evolved into a sophisticated state-backed campaign, “Contagious Interview,” targeting blockchain developers, DeFi protocols, and NFT platforms, with the Bybit hack in February 2025 alone siphoning $1.46 billion in Ethereum. [more]

Contagious Interview: North Korean state-backed hackers have ramped up supply chain attacks on software developers with a campaign called “Contagious Interview,” using 338 malicious npm packages downloaded over 50,000 times. [more]